While the ChatGPT hype may have cooled, many of us have incorporated ChatGPT's capabilities into our everyday lives. I gave it ten different types of tasks and added some commentary on how we "might" get ChatGPT to work better for us. (In this post we use the free GPT-3.5 model, to illustrate what you can get from the free version of ChatGPT on OpenAI's site, in case you don't really like Bing.)

Let’s go!

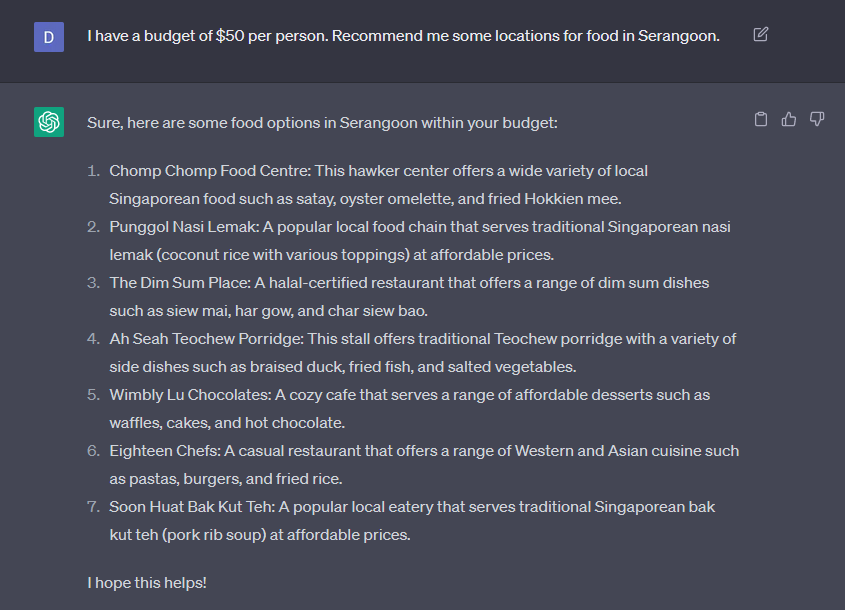

1. Searching for Food Options

In Singapore, we have a first-world problem. Too many food options, too little time to enumerate them all.

For all the flak ChatGPT's lists get, this one is useful because it gives us a starting point from which to iterate on our search.

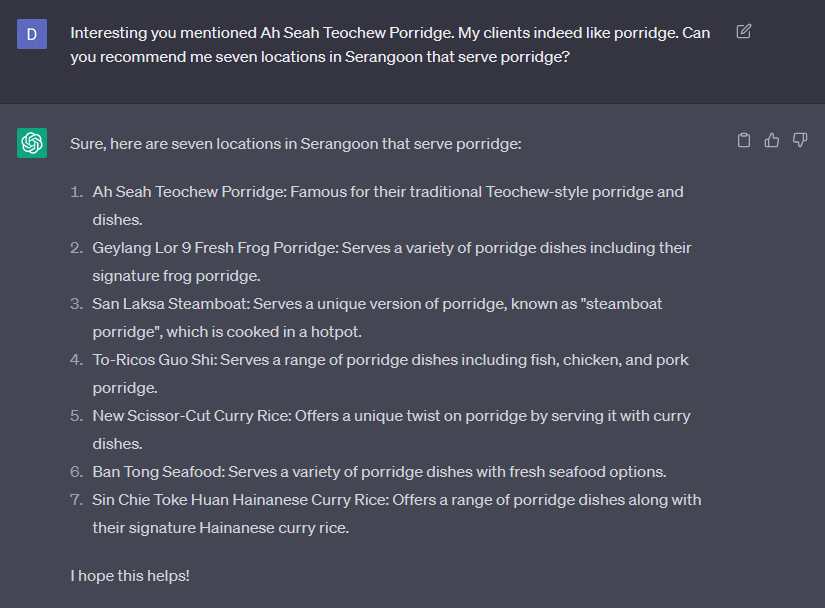

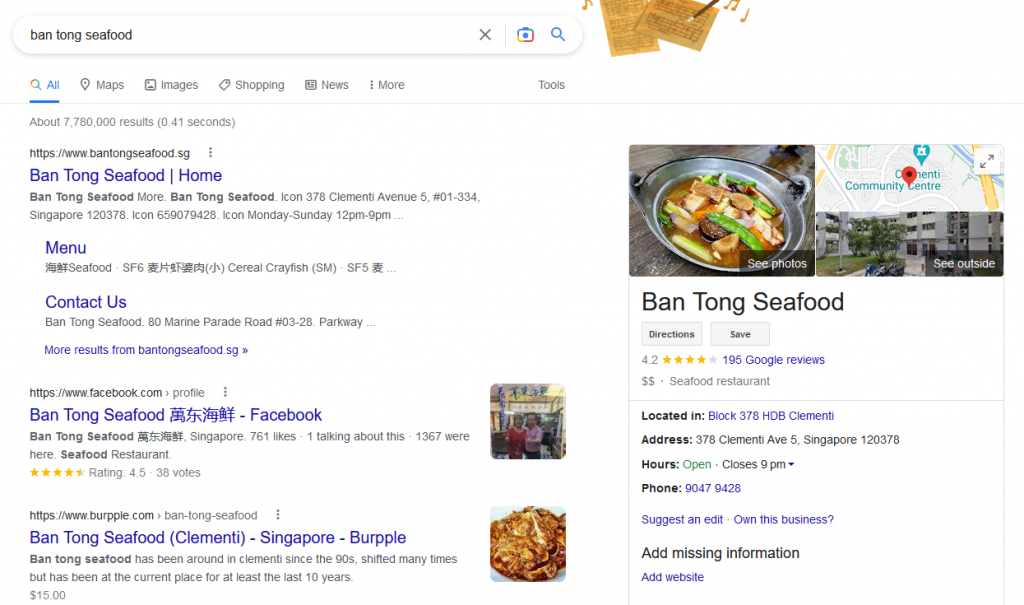

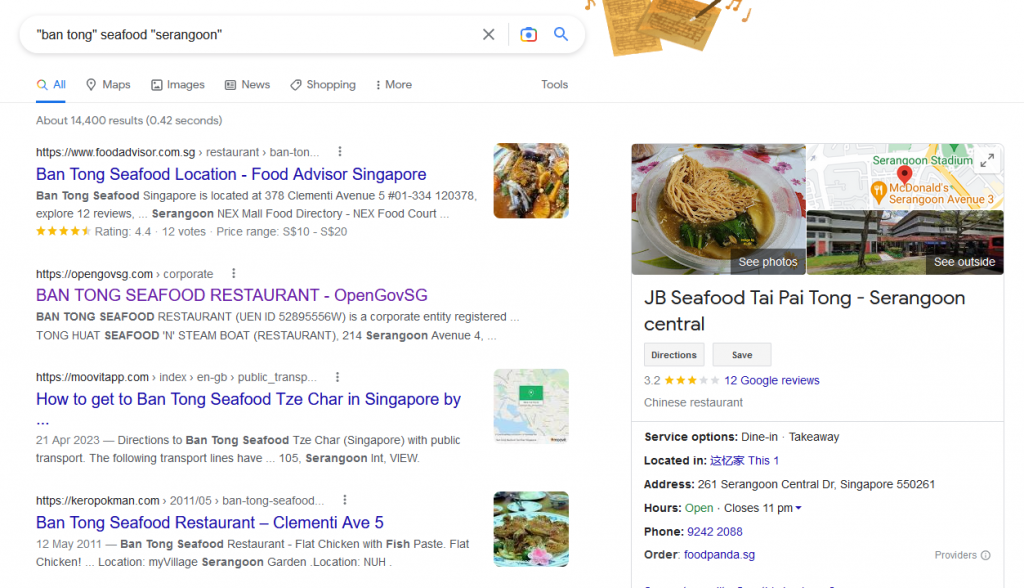

Now we have a slightly more curated list (though still not a very good one). A cursory search of one of these entries reveals a new problem.

Let us check whether there really is a "Ban Tong seafood" in Serangoon. To do that, we add extra constraints to our Google search.

Did ChatGPT work? It is a good brainstorming tool, but not (yet) useful for converging on a good option.

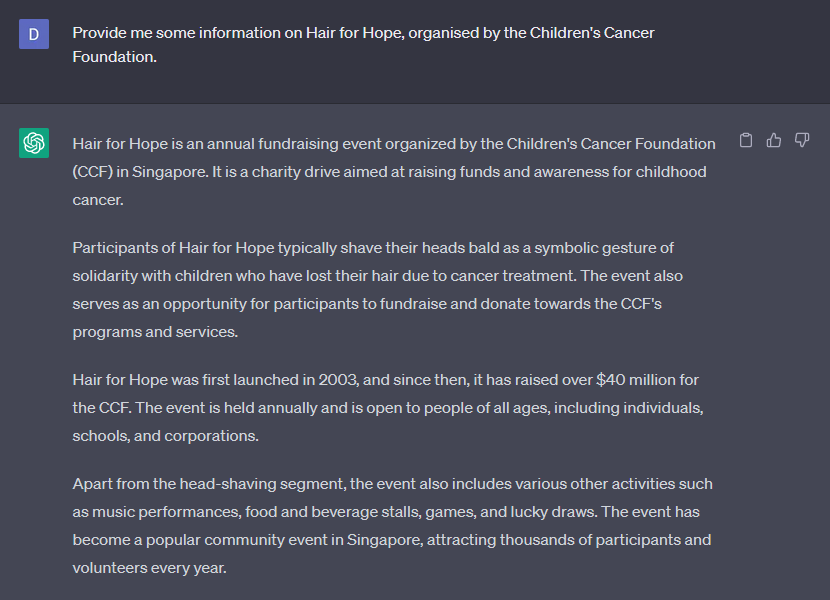

2. Checking on Events

Perhaps food is not ChatGPT's strong suit, since an AI language model only eats light bytes. Let us now try ChatGPT as a research tool, since it appears to be of some use for brainstorming.

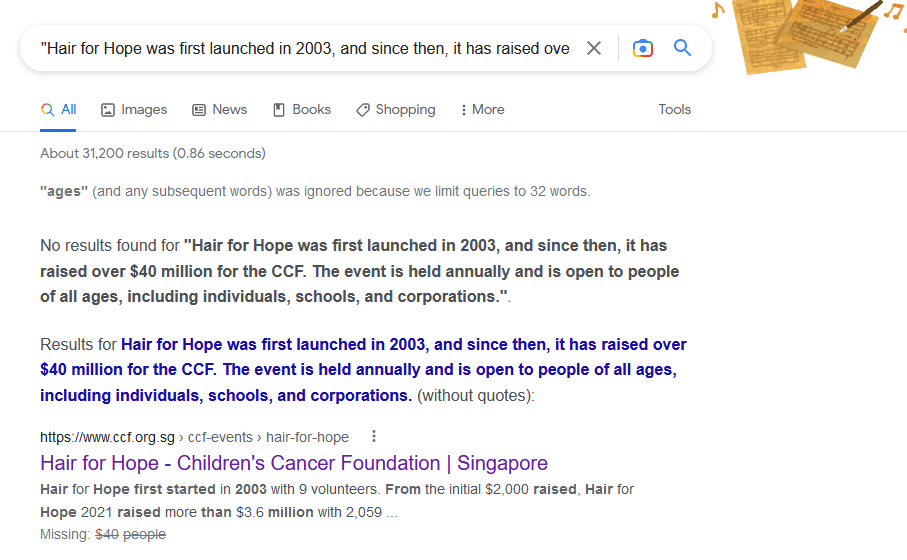

Some people claim that ChatGPT simply copies and pastes text wholesale. We can use one of the paragraphs to check whether ChatGPT in fact did that.

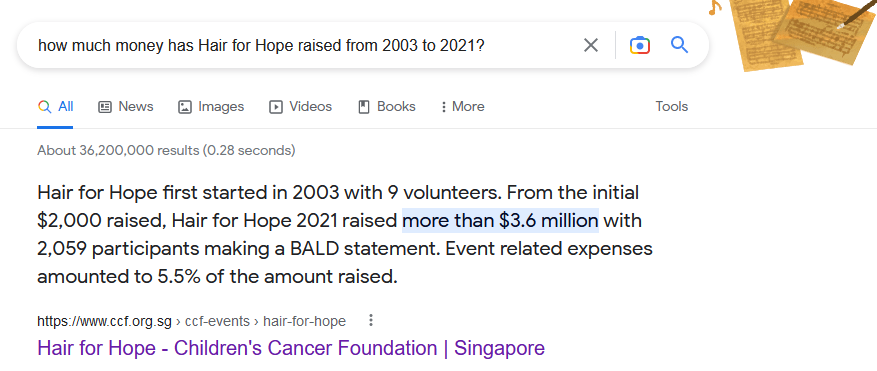

Following the Children's Cancer Foundation (CCF) link reveals the starting date, but not the $40 million figure. How did ChatGPT arrive at it? Somewhere on the CCF website, there is this paragraph:

Yet after 14 years, 42,261 shavees and over $24 million in funds raised, the motivation behind Hair for Hope remains unchanged.

Children’s Cancer Foundation: https://www.ccf.org.sg/humansofccf/05-steven.html

ChatGPT seems to have made an estimate, because a Google search did not turn up any report of such a figure.

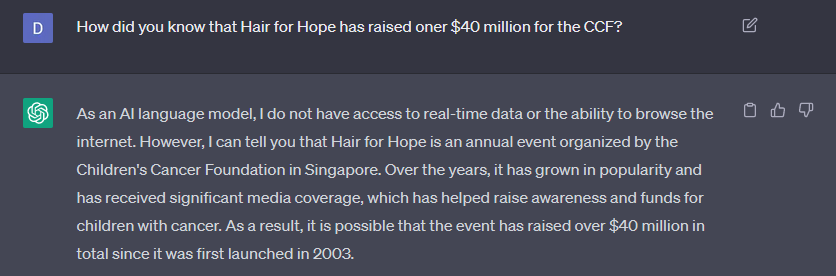

Frustrated, we put the question to ChatGPT directly.

In all likelihood, given the growth of Hair for Hope from 2003 to 2021 (its 20-year history truncated at 2021, since ChatGPT's training data only goes up to 2021) and the growth in funds raised over the years (over $24 million in the first 14 years), ChatGPT's estimate is (1) in the correct order of magnitude and (2) pretty close to reality.
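For the curious, here is a back-of-the-envelope check of that reasoning. It is a minimal Python sketch under two assumptions of our own, not figures from CCF: that funds were raised at a constant average rate (the "over $24 million" spread evenly across the first 14 years), and that the event ran every year from 2003 to 2021.

```python
# Rough sanity check of ChatGPT's "$40 million" Hair for Hope figure.
# Assumption (ours, not CCF's): funds were raised at a constant average rate.
RAISED_FIRST_14_YEARS = 24_000_000  # "over $24 million" after 14 years, per the CCF quote
YEARS_OBSERVED = 14


def linear_estimate(total_years: int) -> float:
    """Extrapolate cumulative funds, assuming the same average amount every year."""
    per_year = RAISED_FIRST_14_YEARS / YEARS_OBSERVED
    return per_year * total_years


# Assumption: one edition per year from 2003 to 2021 inclusive, i.e. 19 editions.
estimate = linear_estimate(2021 - 2003 + 1)
print(f"Linear estimate to 2021: ${estimate / 1e6:.1f} million")
```

This prints a linear estimate of about $32.6 million, the same order of magnitude as ChatGPT's $40 million. Because $24 million is a lower bound and a straight line ignores any growth in later years, the linear figure is better read as a floor than a forecast.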

Did ChatGPT work? For a start, it is better than Google! But remember to check its assertions.

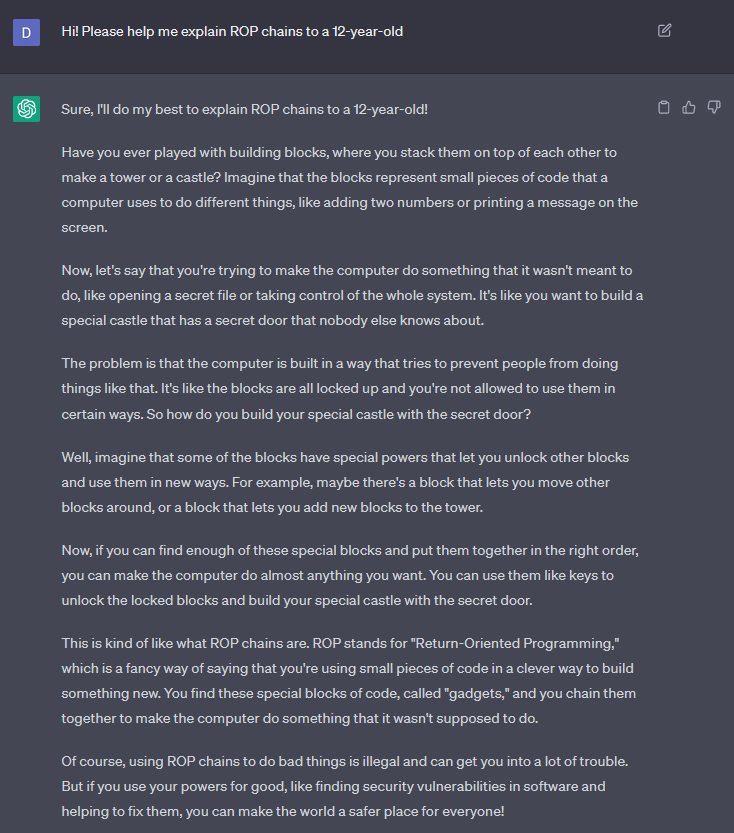

3. “Hard Topics” for Dummies

In my line of work, we deal with complicated concepts. For instance, in exploit development, bypassing a common Windows defence known as Data Execution Prevention (which you should always enable where possible) typically requires the exploit developer to use a technique called "Return-Oriented Programming" (ROP). ROP is difficult to explain to those outside my domain, but a one-sentence summary might go like this:

ROP allows us to use the program’s own code to execute what we want to execute, beyond what the program was supposed to do.

A bad first attempt at explaining ROP to a layperson.

Clearly, we can try harder.

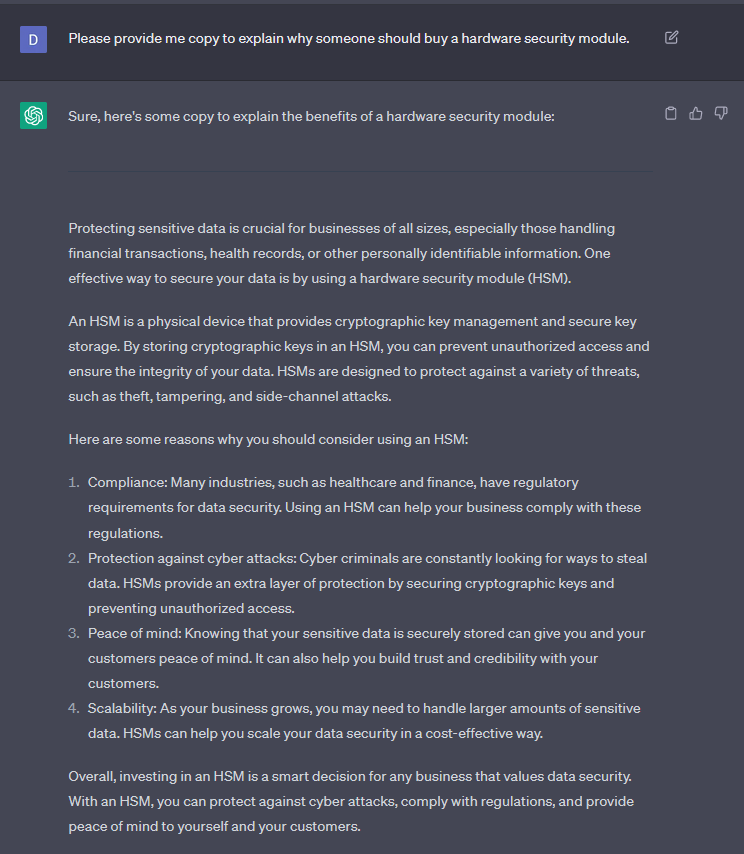

The explanation is very good. Better yet, it sounds like a sales pitch! This task generalises naturally: sales often requires the salesperson to explain a magical (and often complex) product or service in terms of how it solves a user's problems, without the user fretting over its details. So let us try exactly that.

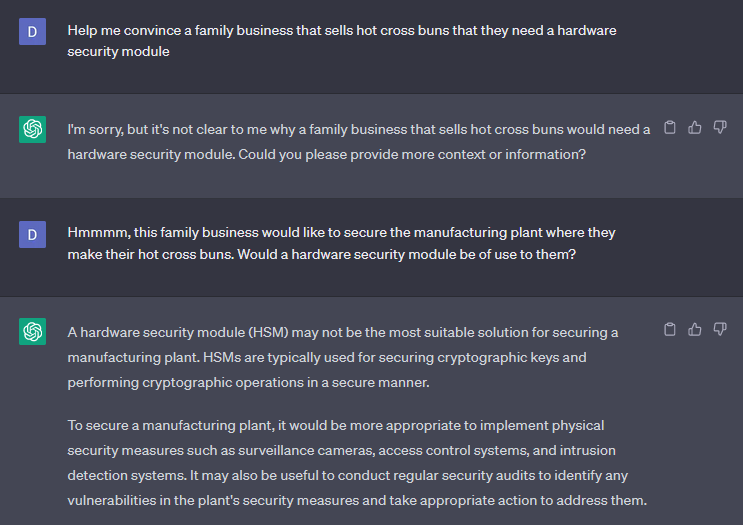

So far, we can see that it writes generic copy well. These are indeed the reasons why a business might go the extra mile to secure its cryptographic keys in a hardware security module (HSM). But does a family business that sells hot cross buns need one?

Did ChatGPT work? Considering that most of us find it difficult to juggle multiple roles, ChatGPT helps with writing copy and is useful for making some fairly easy decisions.

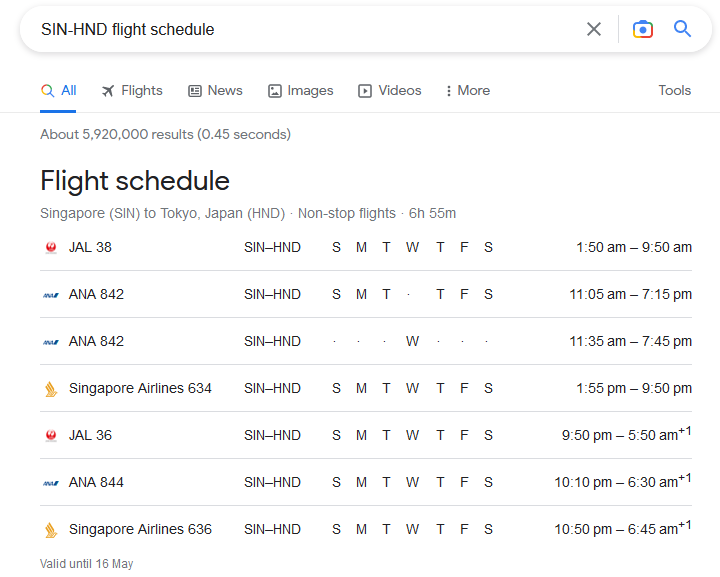

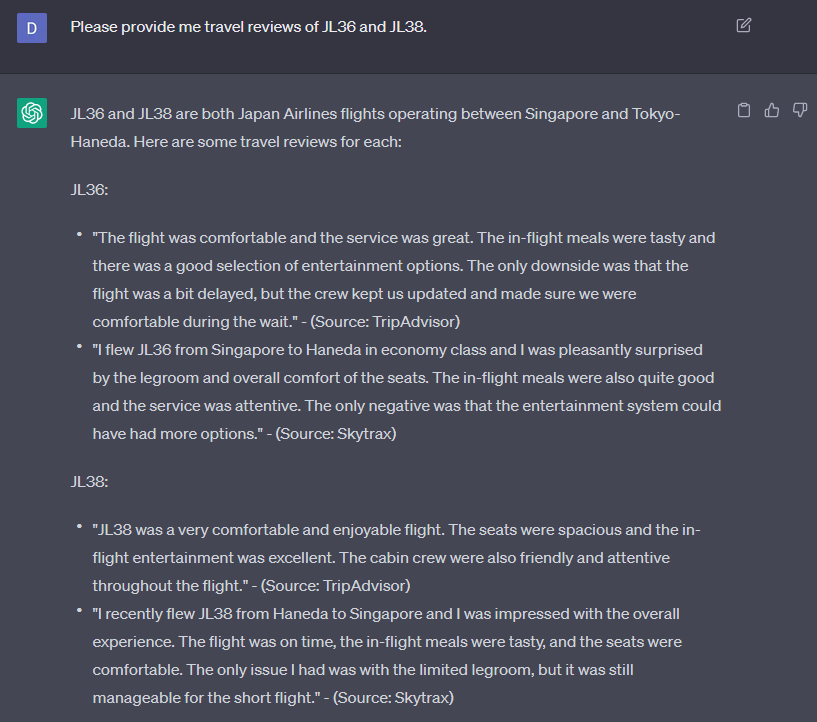

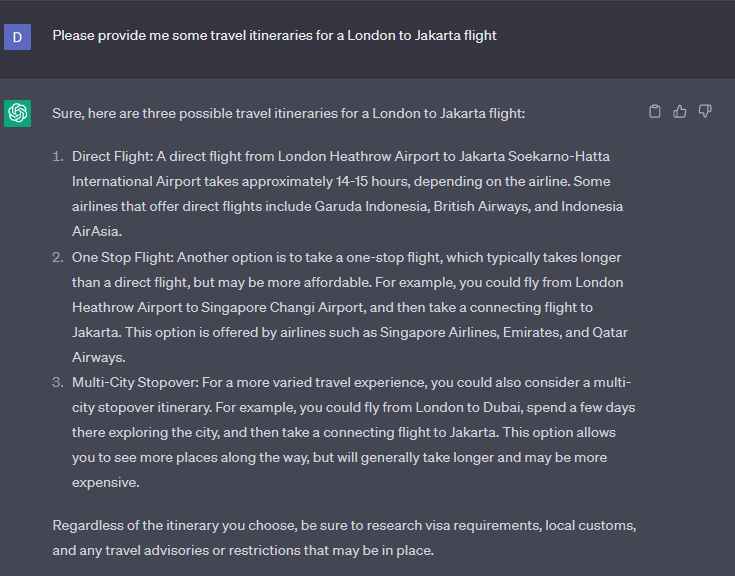

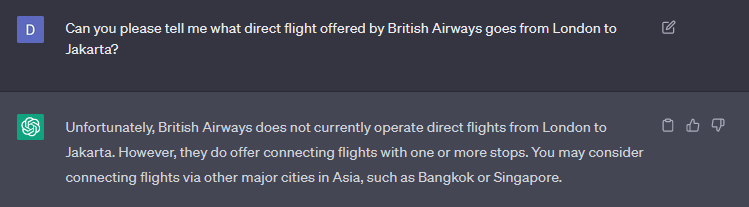

4. Getting Flights

After a stretch of work, a vacation often beckons. Who doesn't like travelling to Tokyo? I thought ChatGPT could easily get us flight information… except it didn't do a great job.

Obviously, Google does this job better.

Oddly enough, ChatGPT does have archived flight information from its training data, which may befuddle the layperson.

Did ChatGPT work? Not really… ChatGPT does not work with current data. But if you know that current and historical data do not differ much, prompting it in specific ways can make ChatGPT more useful.

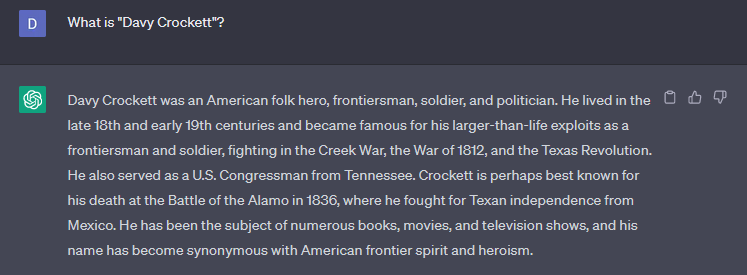

5. Pub Quiz

Sometimes, we would like to impress our significant others with our immense mastery of trivia. But can ChatGPT answer trivia-style questions? Let us begin with something simple.

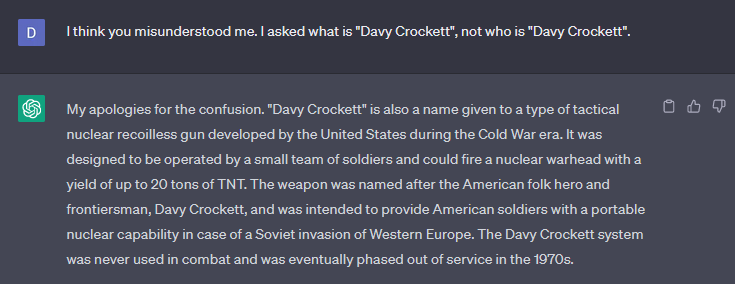

Unfortunately, in a pub quiz, this might not be the answer the question-master seeks. Let us remind ChatGPT of our question.

Did ChatGPT work? If you know what you don’t know, yes, it is useful. But if you don’t know what you don’t know, would you have clarified the question?

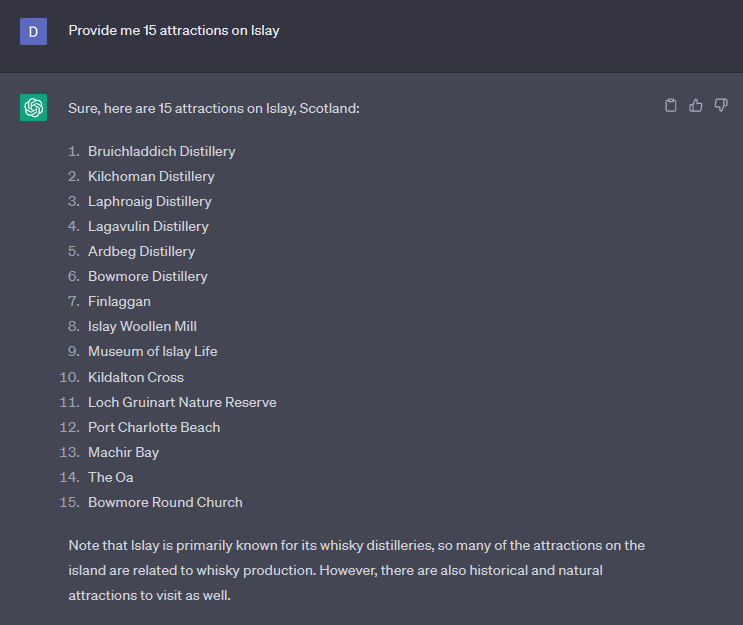

6. Seeking Truth in Travel

Let us go back to travel planning. This time, we ask it the kind of question a layperson might ask.

Fact-checking ChatGPT reveals the problem in its earlier answer.

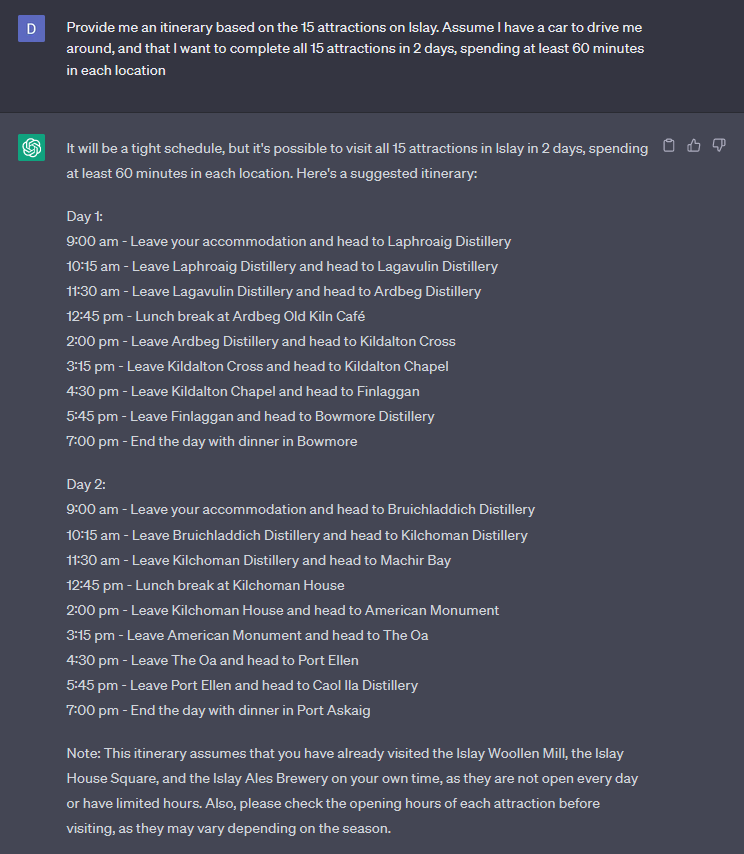

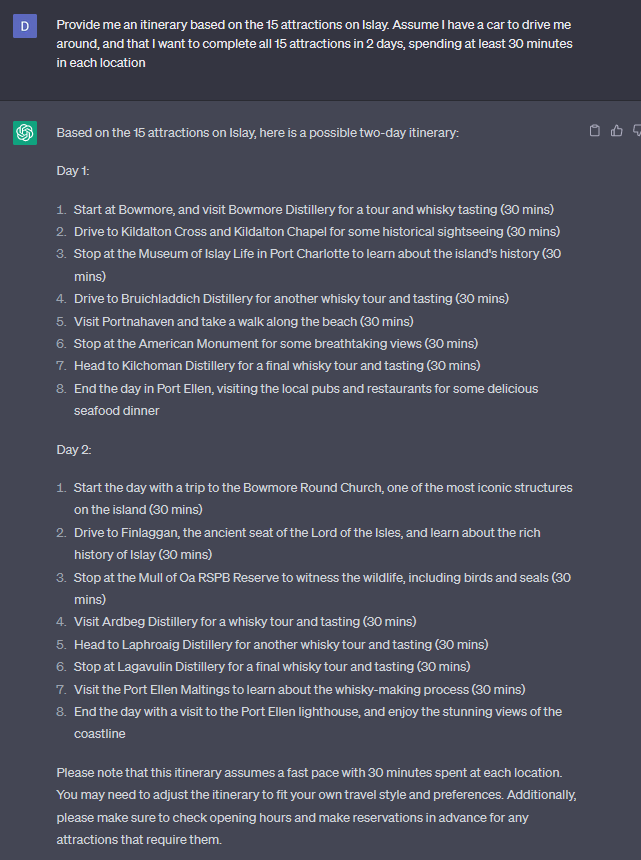

Feeling a bit disappointed, we confine ourselves to a single island.

Next, we give ChatGPT a task:

In other attempts, ChatGPT appears to want to get me drunk with an itinerary like this (my prompt never said I must drink at every distillery!):

In any case, these itineraries require refinement.

Did ChatGPT work? Kinda… once again it kickstarts the job, but it struggles to converge on something good unless I am super specific.